Event-driven architectures have become widespread in recent years, with Kafka as the de facto standard for tooling.

This post provides a complete example of an event-driven architecture, implemented with two Java Spring Boot services that communicate via Kafka.

The main goal of this tutorial is to provide a working example without diving too deeply into details that can distract from getting something up and running quickly.

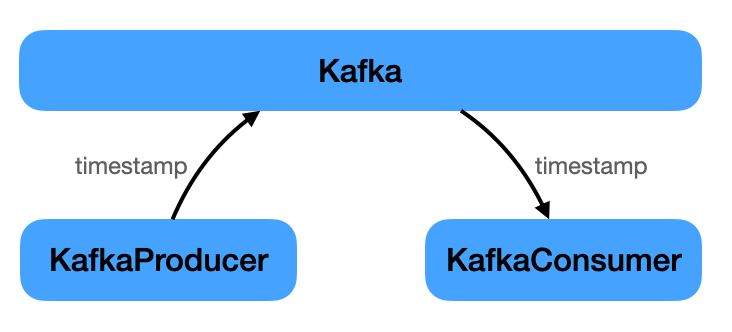

The project consists of a few building blocks:

- Infrastructure (Kafka, Zookeeper)

- Producer (Java Spring Boot service)

- Consumer (Java Spring Boot service)

The producer's only task is to periodically send an event to Kafka. The event simply carries a timestamp. The consumer listens for this event and prints the timestamp.

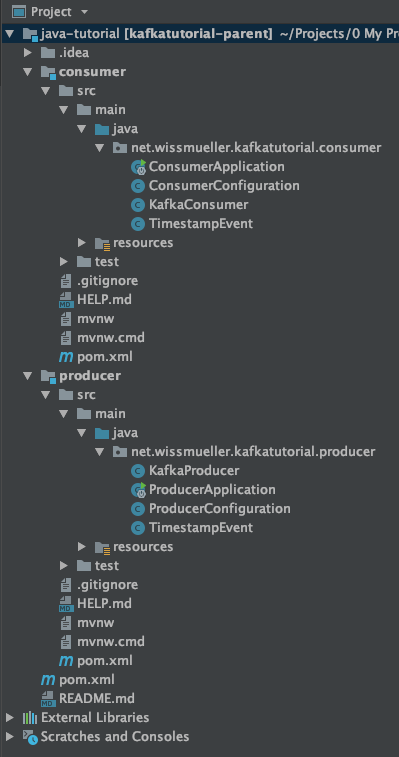
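To make this flow concrete before Kafka enters the picture, here is a minimal in-memory sketch. It is not Kafka: a plain `BlockingQueue` stands in for the topic, and the class `InMemoryFlowSketch` with its `produce` and `consume` methods is made up for illustration. It only shows the decoupling idea, namely that the producer and the consumer share nothing but the channel between them.

```java
import java.time.LocalTime;
import java.time.format.DateTimeFormatter;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical in-memory stand-in for the producer -> topic -> consumer flow.
class InMemoryFlowSketch {

  // Plays the role of the "timestamp" topic (assumption: a plain queue, no broker).
  private final BlockingQueue<String> topic = new LinkedBlockingQueue<>();

  private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("HH:mm:ss");

  // Producer side: format the time and publish it as the event payload.
  String produce(LocalTime time) {
    String timestamp = time.format(FORMAT);
    topic.add(timestamp);
    return timestamp;
  }

  // Consumer side: take the next event if one is waiting, otherwise return null.
  String consume() {
    return topic.poll();
  }

  public static void main(String[] args) {
    InMemoryFlowSketch flow = new InMemoryFlowSketch();
    System.out.println("Sent: " + flow.produce(LocalTime.now()));
    System.out.println("Received: " + flow.consume());
  }
}
```

In the real project the queue is replaced by a Kafka topic, so the two sides can run as separate services.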

The implementation resulted in the following project structure.

The complete project code can be downloaded from here.

You can build this from the command line as explained below, or import it into an IDE such as IntelliJ.

Infrastructure

Only two components, besides the services, are needed to get an event-based architecture running: Kafka and Zookeeper.

See the resources section at the end of the tutorial for links to both.

Kafka is the main component for handling events; Zookeeper is required for several supporting functions. From the Zookeeper website:

ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services.

Below is the docker-compose.yml to get both up and running:

```yaml
version: '3'
services:
  kafka:
    image: wurstmeister/kafka
    container_name: kafka
    ports:
      - "9092:9092"
    environment:
      - KAFKA_ADVERTISED_HOST_NAME=127.0.0.1
      - KAFKA_ADVERTISED_PORT=9092
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
    depends_on:
      - zookeeper
  zookeeper:
    image: wurstmeister/zookeeper
    ports:
      - "2181:2181"
    environment:
      - KAFKA_ADVERTISED_HOST_NAME=zookeeper
```

When this is in place, only the two Java services that implement the “business domain” are needed. It is a very simple one: sending and receiving a timestamp.

Code setup

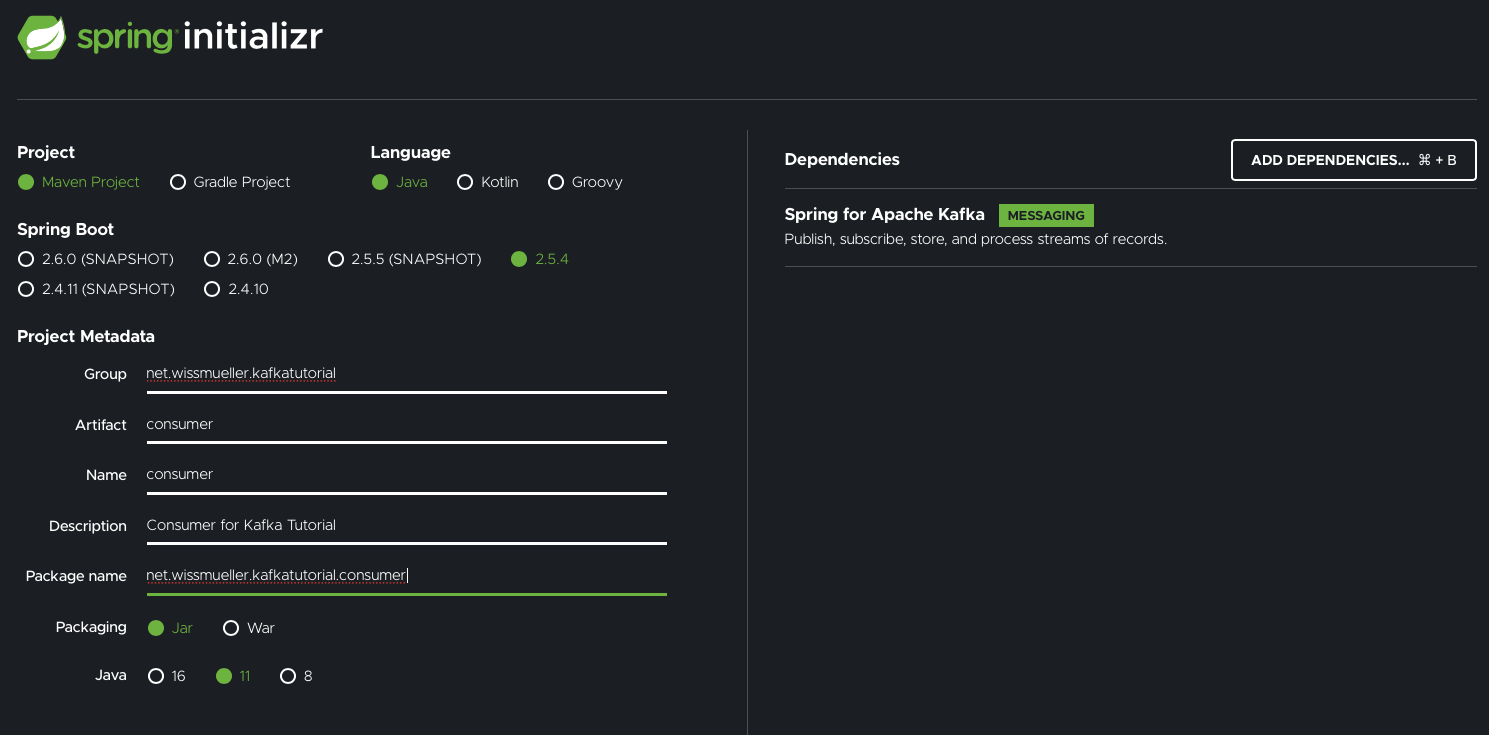

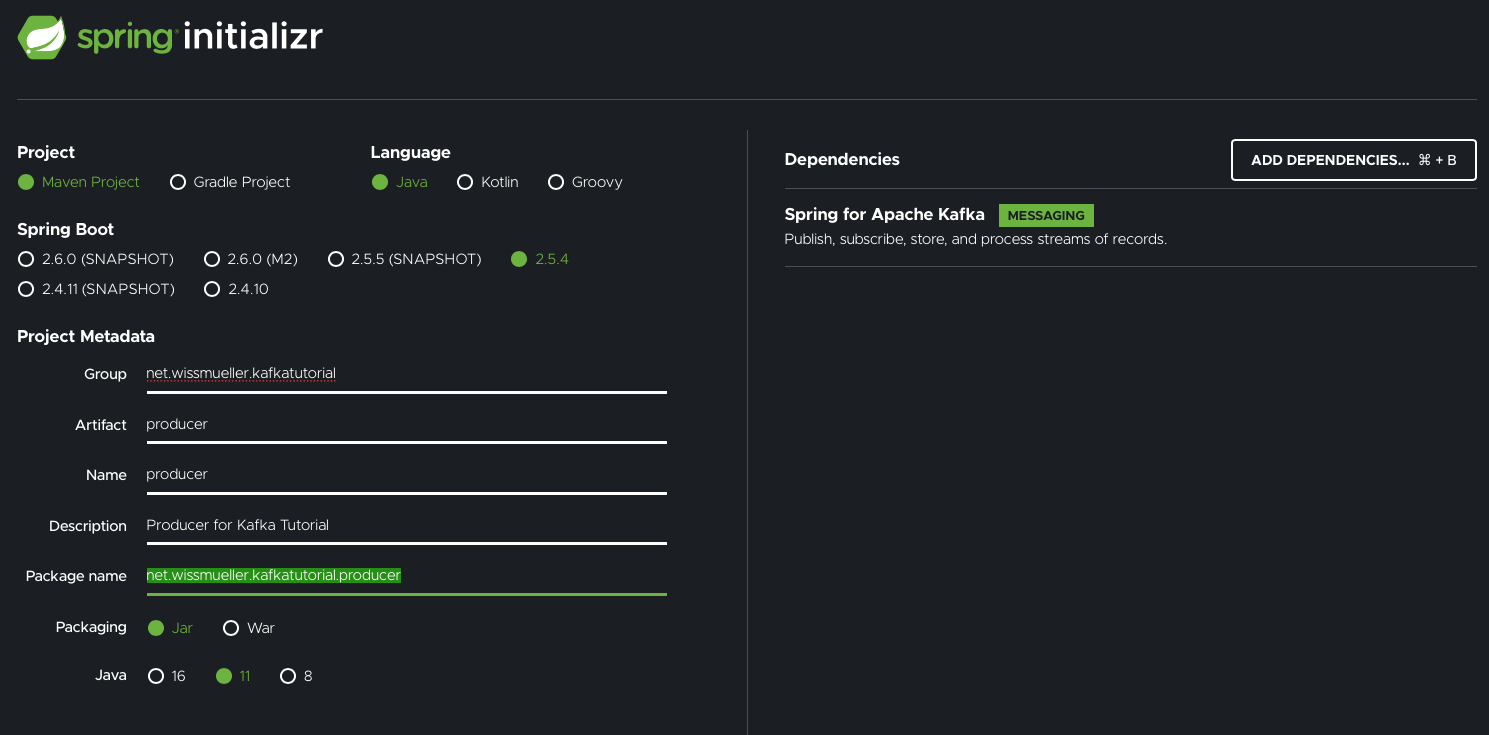

There is a practical website where you can create and initialize a Spring project with all required dependencies: Spring Initializr.

First, I created the producer application:

Next, the consumer application:

Note that I have already added the dependency for “Spring for Apache Kafka”.

After downloading and unpacking the project files, it was time to start implementing.

For both the producer and the consumer, four files are needed:

- The Application

- The Configuration

- The Producer (or the Consumer)

- The Properties file

What goes into those files is explained in the next two chapters. I am not going into the details here, because this tutorial is not meant to be an in-depth Kafka guide.

Producer

As mentioned above, the producer “produces” timestamps and sends them via Kafka to any interested consumers.

Everything starts with the application class in ProducerApplication.java, which has been left mostly unchanged. Only the @EnableScheduling annotation has been added, which is needed by the producer.

```java
package net.wissmueller.kafkatutorial.producer;

// imports...

@SpringBootApplication
@EnableScheduling
public class ProducerApplication {

  public static void main(String[] args) {
    SpringApplication.run(ProducerApplication.class, args);
  }

}
```

Some configuration is needed, which I have placed in ProducerConfiguration.java.

We need to tell the producer where to find Kafka and which serialisers to use for the events. This is done in producerConfigs().

An event has a key and a value. For both we are using the String class. This is specified in kafkaTemplate().

We also need the topic on which to send the events. Therefore we have timestampTopic(), which returns a NewTopic.

```java
package net.wissmueller.kafkatutorial.producer;

// imports...

// @Configuration is required so that Spring registers the @Bean methods below.
@Configuration
public class ProducerConfiguration {

  // The placeholder must match the key used in application.properties.
  @Value("${spring.kafka.bootstrap-servers}")
  private String bootstrapServers;

  // Where to find Kafka and how to serialise keys and values.
  @Bean
  public Map<String, Object> producerConfigs() {
    Map<String, Object> props = new HashMap<>();
    props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
    props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
    props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
    return props;
  }

  @Bean
  public ProducerFactory<String, String> producerFactory() {
    return new DefaultKafkaProducerFactory<>(producerConfigs());
  }

  // Key and value are both Strings.
  @Bean
  public KafkaTemplate<String, String> kafkaTemplate() {
    return new KafkaTemplate<>(producerFactory());
  }

  // The topic on which the events are sent.
  @Bean
  public NewTopic timestampTopic() {
    return TopicBuilder.name("timestamp")
                       .build();
  }

}
```

This class requires some properties in the usual place: application.properties

```properties
spring.kafka.bootstrap-servers=localhost:9092
spring.kafka.consumer.group-id=tutorialGroup
```
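As a side note on the String serialisers configured in producerConfigs(): Kafka itself only transports byte arrays, so a serialiser turns the event into bytes and a deserialiser on the consumer side turns them back. The sketch below mimics that round trip with plain JDK calls. The class `StringSerdeSketch` is made up for illustration and is not part of Kafka; it assumes UTF-8, which is also the default encoding of Kafka's `StringSerializer`.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Illustrative sketch of what a String serialiser/deserialiser pair does conceptually.
class StringSerdeSketch {

  // Serialise: turn the event value into the bytes that travel over the wire.
  static byte[] serialize(String value) {
    return value == null ? null : value.getBytes(StandardCharsets.UTF_8);
  }

  // Deserialise: turn the received bytes back into the original String.
  static String deserialize(byte[] data) {
    return data == null ? null : new String(data, StandardCharsets.UTF_8);
  }

  public static void main(String[] args) {
    byte[] wire = serialize("12:34:56");
    System.out.println(Arrays.toString(wire));
    System.out.println(deserialize(wire));
  }
}
```

This is why the producer and the consumer have to agree on the format: the consumer must use a deserialiser that matches the serialiser chosen here.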

The producer is in KafkaProducer.java:

```java
package net.wissmueller.kafkatutorial.producer;

// imports...

@Component
public class KafkaProducer {

  private static final Logger log = LoggerFactory.getLogger(KafkaProducer.class);

  private static final SimpleDateFormat dateFormat = new SimpleDateFormat("HH:mm:ss");

  private final KafkaTemplate<String, String> kafkaTemplate;

  KafkaProducer(KafkaTemplate<String, String> kafkaTemplate) {
    this.kafkaTemplate = kafkaTemplate;
  }

  void sendMessage(String message, String topicName) {
    kafkaTemplate.send(topicName, message);
  }

  // Sends the current time to the "timestamp" topic every 5 seconds.
  @Scheduled(fixedRate = 5000)
  public void reportCurrentTime() {
    String timestamp = dateFormat.format(new Date());
    sendMessage(timestamp, "timestamp");
    log.info("Sent: {}", timestamp);
  }

}
```

This class is initialized with the KafkaTemplate.

In reportCurrentTime() the timestamp is sent to Kafka every 5 seconds, implemented via the @Scheduled annotation. This works only when @EnableScheduling is set in the application class.
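For readers who have not used @Scheduled before, the following plain-JDK sketch shows roughly what fixed-rate execution means, without Spring. It is an analogue for illustration, not how Spring implements the annotation. The class `FixedRateSketch` and the method `collectTimestamps` are made up, and `DateTimeFormatter` is swapped in for the `SimpleDateFormat` used above because it is thread-safe.

```java
import java.time.LocalTime;
import java.time.format.DateTimeFormatter;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical plain-JDK analogue of a method annotated with @Scheduled(fixedRate = ...).
class FixedRateSketch {

  private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("HH:mm:ss");

  // Runs a timestamp task at a fixed rate until `ticks` timestamps were collected
  // (or a safety timeout elapsed), then shuts the scheduler down.
  static List<String> collectTimestamps(int ticks, long periodMillis) {
    List<String> collected = new CopyOnWriteArrayList<>();
    CountDownLatch remaining = new CountDownLatch(ticks);
    ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    scheduler.scheduleAtFixedRate(() -> {
      if (remaining.getCount() > 0) {
        collected.add(LocalTime.now().format(FORMAT));
        remaining.countDown();
      }
    }, 0, periodMillis, TimeUnit.MILLISECONDS);
    try {
      remaining.await(ticks * periodMillis + 5000, TimeUnit.MILLISECONDS);
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
    } finally {
      scheduler.shutdownNow();
    }
    return collected;
  }

  public static void main(String[] args) {
    // Three ticks, one second apart; the tutorial's producer uses 5000 ms and never stops.
    collectTimestamps(3, 1000).forEach(t -> System.out.println("Sent: " + t));
  }
}
```

With Spring, the scheduler setup and lifecycle are handled by the framework, which is why the producer only needs the two annotations.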

That is all for the producer. On to the consumer…

Consumer

Now we receive the timestamps sent by the producer.

As with the producer, the entry point is the application class in ConsumerApplication.java. This file is unchanged from the version generated by the Spring Initializr.

package net.wissmueller.kafkatutorial.consumer;

// imports...

@SpringBootApplication

public class ConsumerApplication {

public static void main(String[] args) {

SpringApplication.run(ConsumerApplication.class, args);

}

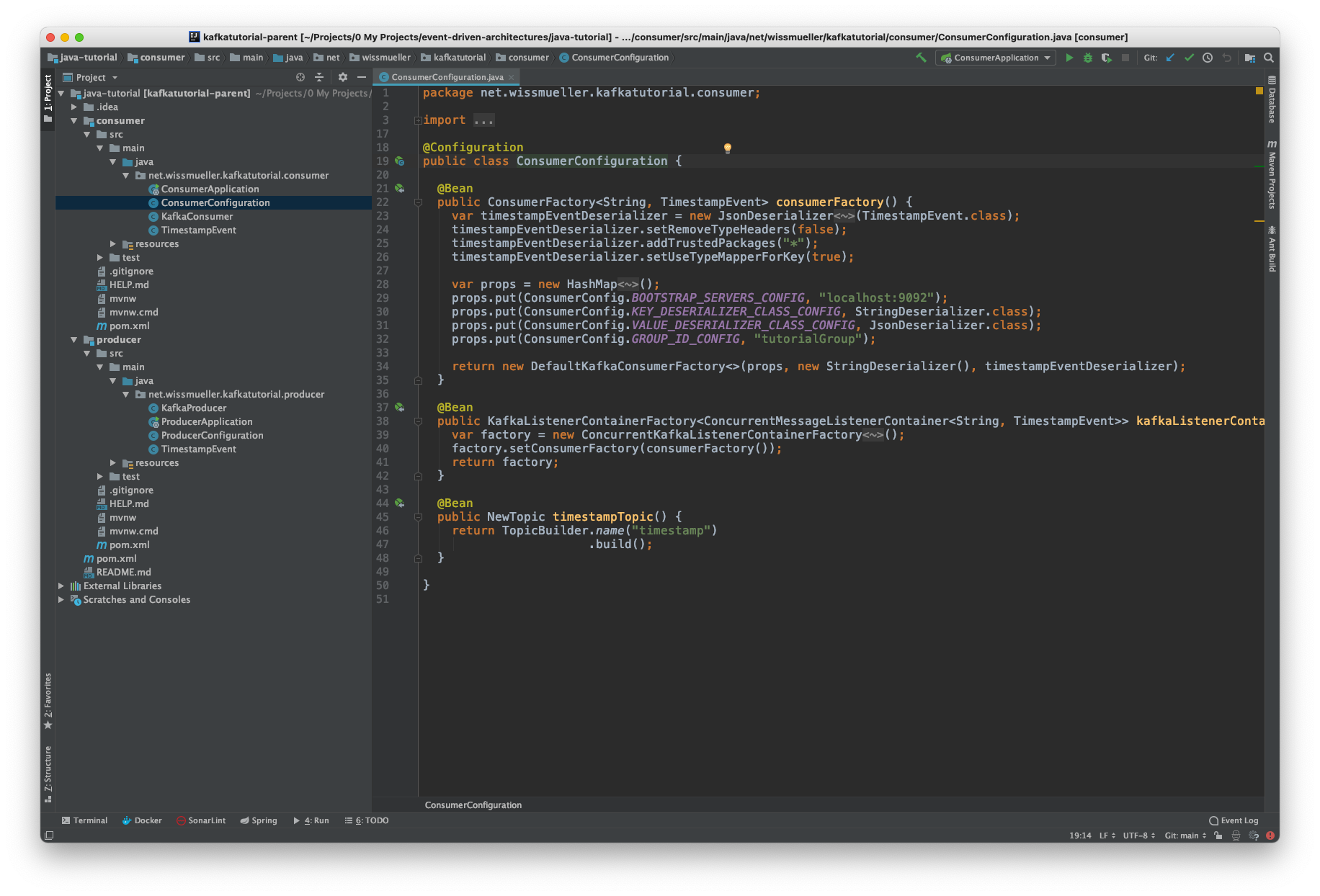

}The configuration is in ConsumerConfiguration.java, which, analogous to the producer, specifies:

- how to connect to Kafka

- which deserialisers to use

- the format of Kafka events

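```java
package net.wissmueller.kafkatutorial.consumer;

// imports...

// @Configuration is required so that Spring registers the @Bean methods below.
@Configuration
public class ConsumerConfiguration {

  // The placeholders must match the keys used in application.properties.
  @Value("${spring.kafka.bootstrap-servers}")
  private String bootstrapServers;

  @Value("${spring.kafka.consumer.group-id}")
  private String groupId;

  // Where to find Kafka, which group to join, and how to deserialise keys and values.
  @Bean
  public Map<String, Object> consumerConfigs() {
    Map<String, Object> props = new HashMap<>();
    props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
    // A hand-built consumer factory does not pick up the group id from
    // application.properties automatically, so it is passed explicitly.
    props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);
    props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
    props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
    return props;
  }

  @Bean
  public ConsumerFactory<String, String> consumerFactory() {
    return new DefaultKafkaConsumerFactory<>(consumerConfigs());
  }

  @Bean
  public KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory() {
    ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
    factory.setConsumerFactory(consumerFactory());
    return factory;
  }

  @Bean
  public NewTopic timestampTopic() {
    return TopicBuilder.name("timestamp")
                       .build();
  }

}
```

Here we need to set the following properties in application.properties: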

```properties
spring.kafka.bootstrap-servers=localhost:9092
spring.kafka.consumer.group-id=tutorialGroup
```
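The group-id property deserves a short illustration. In Kafka, the read position (offset) is tracked per consumer group, so each group works through the events independently of the others. The following sketch models that idea for a single partition with plain JDK collections. The class `GroupOffsetSketch` is made up and heavily simplified. In particular, it lets a new group start at the beginning of the log, whereas in Kafka the starting point of a new group depends on the consumer configuration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, heavily simplified model of a single-partition topic with consumer groups.
class GroupOffsetSketch {

  private final List<String> log = new ArrayList<>();           // append-only events
  private final Map<String, Integer> offsets = new HashMap<>(); // next index to read, per group

  void publish(String event) {
    log.add(event);
  }

  // Returns the events this group has not seen yet and advances the group's offset.
  List<String> poll(String groupId) {
    int from = offsets.getOrDefault(groupId, 0);
    List<String> unseen = new ArrayList<>(log.subList(from, log.size()));
    offsets.put(groupId, log.size());
    return unseen;
  }

  public static void main(String[] args) {
    GroupOffsetSketch topic = new GroupOffsetSketch();
    topic.publish("10:00:00");
    topic.publish("10:00:05");
    System.out.println(topic.poll("tutorialGroup")); // [10:00:00, 10:00:05]
    System.out.println(topic.poll("tutorialGroup")); // [] (already consumed by this group)
    System.out.println(topic.poll("otherGroup"));    // [10:00:00, 10:00:05]
  }
}
```

In this tutorial there is only one consumer in one group, so every timestamp is delivered to it exactly as in the first `poll` call.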

Last but not least, we have the consumer in KafkaConsumer.java. We only have to specify a listener on a topic by using the @KafkaListener annotation and define the action. In this case, the timestamp is logged.

```java
package net.wissmueller.kafkatutorial.consumer;

// imports...

@Component
public class KafkaConsumer {

  private static final Logger log = LoggerFactory.getLogger(KafkaConsumer.class);

  // Called for every event that arrives on the "timestamp" topic.
  @KafkaListener(topics = "timestamp")
  void listener(String timestamp) {
    log.info("Received: {}", timestamp);
  }

}
```

Run Example Code

It's time to run everything. Remember the following project structure:

```
code/
- docker-compose.yml
- producer/
-- pom.xml
--...
- consumer/
-- pom.xml
--...
```

In the code directory, Kafka and Zookeeper are started via docker-compose:

docker-compose up -d
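Before starting the services, it can help to confirm that the two containers accept connections on the ports published in docker-compose.yml. The small check below is an optional sketch using only the JDK. The class `BrokerPortCheck` is made up for this purpose, and an open port only shows that something is listening, not that Kafka has finished starting up.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether a TCP port accepts connections.
class BrokerPortCheck {

  // Returns true if a TCP connection to host:port can be opened within the timeout.
  static boolean isReachable(String host, int port, int timeoutMillis) {
    try (Socket socket = new Socket()) {
      socket.connect(new InetSocketAddress(host, port), timeoutMillis);
      return true;
    } catch (IOException e) {
      return false;
    }
  }

  public static void main(String[] args) {
    // Ports as published in docker-compose.yml.
    System.out.println("Kafka port 9092 reachable: " + isReachable("localhost", 9092, 2000));
    System.out.println("Zookeeper port 2181 reachable: " + isReachable("localhost", 2181, 2000));
  }
}
```

If either line reports `false`, the containers are probably still starting or the port mapping differs from the one shown above.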

In the producer directory, start the service with:

mvn spring-boot:run

Finally, in a new terminal window, change into the consumer directory and start the service the same way:

mvn spring-boot:run

You should see output similar to the following. On the left is the producer log output and on the right is the consumer log output.

This concludes the introductory tutorial on creating an event-driven architecture using Kafka and Java Spring Boot.

The complete project code can be downloaded from here.